social engineering

In real life, group chats are often unstructured, chaotic, and dynamic. People respond at their own pace, interrupt each other, add or remove people mid-conversation, and (if you're really popular) bounce between several conversations at once. In my latest project, I tried to replicate this same experience, but replaced my contact list with AI replicas of people who inspire me. The idea came from my friend Sharif, who mentioned he'd been using Claude to generate Socratic-style conversations between AI personas to learn about various topics. The result is a system that feels super fun and strikingly realistic.

This is a technical write-up on how I engineered "the perfect group chat". I'll walk through the system architecture and dive into the code in detail.

What is a group chat?

To start, let's talk about group chats. A good group chat has the following characteristics:

- Multiple, dynamic participants: A group chat has 3+ people in a single conversation thread. You can invite someone new mid-conversation or remove someone who's no longer relevant.

- Unpredictable timing: People respond at their own pace. Some respond right away, others take much longer or sit out of the conversation for a bit.

- Frequent interruptions: Conversations overlap and interweave. You might be typing out a response, only to find that someone else has sent messages that change the context, leaving you to either revise your message or send it anyway.

These human dynamics keep group chats interesting and lively. My goal was to replicate them in an AI-native application with inspirational figures instead of real humans.

The high-level implementation

At this point, I think most of you are familiar with how an AI chatbot works: you pass in an array of message history as context and prompt the LLM to produce the next message based on that conversation history.

In a simple one-on-one chatbot, the flow is: user → AI → user → AI. The LLM sends a single response each time the human user speaks.

But in a group chat, you have multiple AI participants that not only talk to the user, but talk to each other. The AI participants take turns speaking without ever needing the user to participate in the conversation. The LLM can choose who talks next and engage with the other AI participants.

Quick architecture overview

To achieve this, I landed on the following architecture. There are three main components:

- Contacts: A list of contacts (like Elon Musk, Steve Jobs, etc.) with their respective prompts.

- Chat completion endpoint: An API endpoint (/api/chat/route.ts) for generating the AI messages with an LLM.

- Message queue system: A turn-based priority queue that decides who should speak next (and when), manages user interruptions, and ensures the conversation flows logically.

The first two are not too dissimilar to what you might see with a one-on-one chatbot system. Most of the interesting logic to handle the group chat dynamics lies in the third.

Below, I'll walk through everything in more detail.

Contacts

At the core of the system is the contact data. With a well-known figure and a good model, you can often get away with a simple prompt like "respond to this message as Elon Musk" and the model will do a pretty good job. But in an effort to make the responses feel more realistic, I used o1 to generate concise personality prompts for each person. I kept the prompts short and simple with context on who the person is and how they communicate, rather than what they communicate about. I personally find it frustrating when you're communicating with a chatbot that is limited in what it can talk about, so I wanted to make sure the prompts focused on the "how" rather than the "what".

These contacts and prompts are stored in a separate data file (initial-contacts.ts).

export const initialContacts: InitialContact[] = [

{

name: "Elon Musk",

title: "Founder & CEO of Tesla",

prompt:

"You are Elon Musk, a hyper-ambitious tech entrepreneur. Communicate with intense energy, technical depth, and a mix of scientific precision and radical imagination. Your language is direct, often provocative, and filled with ambitious vision.",

bio: "Elon Musk is a serial entrepreneur, leading Tesla, SpaceX, and other ventures that push the boundaries of innovation. Known for bold visions—from electric vehicles to Mars colonization—he continuously disrupts traditional industries.",

},

];
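The InitialContact type isn't shown here. As a minimal sketch, assuming it has only the four fields used in the entry above, it could look like the following. The personaLine helper is hypothetical; it mirrors the lookup the chat endpoint performs when it builds its prompt, including the fallback for contacts without a stored prompt.

```typescript
// Assumed shape, inferred from the fields in the example entry above.
type InitialContact = {
  name: string;
  title: string;
  prompt: string; // how the persona communicates
  bio: string; // who the persona is
};

// Shortened sample data for illustration only.
const sampleContacts: InitialContact[] = [
  {
    name: "Elon Musk",
    title: "Founder & CEO of Tesla",
    prompt: "You are Elon Musk, a hyper-ambitious tech entrepreneur.",
    bio: "Serial entrepreneur leading Tesla, SpaceX, and other ventures.",
  },
];

// Hypothetical helper: one "name: style prompt" line per participant,
// falling back to a generic instruction for unknown contacts.
function personaLine(contacts: InitialContact[], name: string): string {
  const contact = contacts.find((c) => c.name === name);
  return contact ? `${name}: ${contact.prompt}` : `${name}: Just be yourself.`;
}
```

The fallback matters because users can add recipients who aren't in the initial contact list.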

In the chat endpoint, the LLM is told to choose the next participant and mimic their style based on the prompt.

const prompt = `

You're in a text message group chat with a human user ("me") and: ${recipients

.map((r: Recipient) => r.name)

.join(", ")}.

You'll be one of these people for your next msg: ${sortedParticipants

.map((r: Recipient) => r.name)

.join(", ")}.

Match your character's style:

${sortedParticipants

.map((r: Recipient) => {

const contact = initialContacts.find((p) => p.name === r.name);

return contact ? `${r.name}: ${contact.prompt}` : `${r.name}: Just be yourself.`;

})

.join("\n")}

`;

I also created a few initial conversations with some of my favorite people to populate the empty state. These conversations are stored in a separate data file (initial-conversations.ts) and loaded alongside user-created conversations from local storage. When the application loads, it first imports the initial conversations, then checks local storage for any saved conversations. If found, it preserves any modifications to initial conversations (such as new messages or recipients) as well as any new user-created conversations. This state is maintained by automatically saving to local storage whenever conversations are updated.

// load and merge initial conversations and saved conversations

useEffect(() => {

const saved = localStorage.getItem(STORAGE_KEY);

let allConversations = [...initialConversations];

if (saved) {

const parsedConversations = JSON.parse(saved);

const initialIds = new Set(initialConversations.map((conv) => conv.id));

const userConversations = [];

const modifiedInitialConversations = new Map();

for (const savedConv of parsedConversations) {

if (initialIds.has(savedConv.id)) {

modifiedInitialConversations.set(savedConv.id, savedConv);

} else {

userConversations.push(savedConv);

}

}

allConversations = allConversations.map((conv) =>

modifiedInitialConversations.has(conv.id) ? modifiedInitialConversations.get(conv.id) : conv,

);

allConversations = [...allConversations, ...userConversations];

}

setConversations(allConversations);

}, []);
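The same merge can be pulled out of the effect into a pure function, which makes it easier to test. This is a sketch under assumptions: the Conversation type below is a simplified stand-in, and parseSaved is a hypothetical guard for corrupted local storage contents that the snippet above doesn't include.

```typescript
// Simplified stand-in; the real Conversation type has more fields.
type Conversation = { id: string; messages: string[] };

// Saved copies of built-in conversations replace the originals in place;
// user-created conversations are appended afterwards.
function mergeConversations(initial: Conversation[], saved: Conversation[]): Conversation[] {
  const savedById = new Map(saved.map((c) => [c.id, c] as const));
  const initialIds = new Set(initial.map((c) => c.id));
  const merged = initial.map((c) => savedById.get(c.id) ?? c);
  const userCreated = saved.filter((c) => !initialIds.has(c.id));
  return [...merged, ...userCreated];
}

// Hypothetical guard: treat missing, unparseable, or non-array
// storage contents as "nothing saved".
function parseSaved(raw: string | null): Conversation[] {
  if (!raw) return [];
  try {
    const parsed: unknown = JSON.parse(raw);
    return Array.isArray(parsed) ? (parsed as Conversation[]) : [];
  } catch {
    return [];
  }
}
```

Inside the effect, this would reduce to something like setConversations(mergeConversations(initialConversations, parseSaved(localStorage.getItem(STORAGE_KEY)))).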

Chat completion endpoint

Again, the chat endpoint looks pretty similar to what you might expect for a one-on-one chatbot. We call the LLM with an array of messages and a tool call that the model must use to return structured JSON.

// system prompt

const chatMessages = [

{ role: "system", content: prompt },

...(messages || []).map((msg: Message) => ({

role: "user",

content: `${msg.sender}: ${msg.content}${

msg.reactions?.length

? ` [reactions: ${msg.reactions

.map((r) => `${r.sender} reacted with ${r.type}`)

.join(", ")}]`

: ""

}`,

})),

];

// chat completion

const response = await client.chat.completions.create({

model: "claude-3-5-sonnet-latest",

messages: [...chatMessages],

tool_choice: "required",

tools: [

{

type: "function",

function: {

name: "chat",

description: "returns the next message in the conversation",

parameters: {

type: "object",

properties: {

sender: {

type: "string",

enum: sortedParticipants.map((r: Recipient) => r.name),

},

content: { type: "string" },

reaction: {

type: "string",

enum: ["heart", "like", "dislike", "laugh", "emphasize"],

description: "optional reaction to the last message",

},

},

required: ["sender", "content"],

},

},

},

],

temperature: 0.5,

max_tokens: 1000,

});

In the code snippet above, we have:

- Model: I used Braintrust's proxy, so it's easy to swap out any model that supports tool calls.

- Messages: This is the array of messages that gives the LLM context on the conversation history.

- Tools: The LLM must use the chat tool to return its output in a structured JSON format.

- sortedParticipants.map(...): We specify which AI personas are eligible to speak next.

- tool_choice: "required": We're telling the model that it must use the chat tool.
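The snippet above stops at the API call. With an OpenAI-style chat completions response, the tool arguments come back as a JSON string on the first choice's tool_calls entry. Here's a minimal sketch of how that could be unpacked and validated. The parseChatToolCall helper and the local types are assumptions for illustration, not the code from the actual endpoint.

```typescript
// Local types covering only the fields read below (OpenAI-style response shape).
type ToolCall = { function: { name: string; arguments: string } };
type ChatCompletionLike = {
  choices: { message: { tool_calls?: ToolCall[] } }[];
};

type ChatToolResult = { sender: string; content: string; reaction?: string };

// Hypothetical helper: finds the "chat" tool call and validates its arguments.
function parseChatToolCall(response: ChatCompletionLike, allowedSenders: string[]): ChatToolResult {
  const call = response.choices[0]?.message.tool_calls?.find((c) => c.function.name === "chat");
  if (!call) throw new Error("model did not call the chat tool");

  // Tool arguments arrive as a JSON string, not an object.
  const args = JSON.parse(call.function.arguments) as Partial<ChatToolResult>;
  if (typeof args.sender !== "string" || !allowedSenders.includes(args.sender)) {
    throw new Error(`unexpected sender: ${String(args.sender)}`);
  }
  if (typeof args.content !== "string") throw new Error("missing content");
  return { sender: args.sender, content: args.content, reaction: args.reaction };
}
```

Checking the sender against the eligible participants is a cheap safeguard in case a model ignores the enum in the tool schema.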

Message queue system

The most interesting part of this system is the message queue, which coordinates the flow of the conversation. This priority-based, turn-taking system helps simulate the realistic dynamics of a group chat. Specifically, it has the following features:

- Dynamic flow: The queue handles when we call the API to generate the next message and the timing of when we display the typing animation, reactions, and the actual message.

- User interruptions: If the user sends a new message, we abort whatever AI task is running, let the user's message through, and then continue the conversation with updated context.

- AI reactions: We queue potential reactions, process them in order, and display them one at a time.

- When to wrap up: We stop creating more AI messages once we hit a limit (like 5 consecutive AI messages).

- Concurrent conversations: We can have multiple conversations going at once, each with its own participants, message history, and tasks.

Now, we'll take a closer look at how the queue works to achieve these dynamics.
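Before getting into the details, here's a minimal sketch of how a priority-based queue like this could order its tasks. The real addTask isn't reproduced in this post, so insertByPriority and the tie-breaking rule are assumptions. The only priority value taken from the actual code is 100 for user messages; the value 50 for AI tasks in the usage note is made up.

```typescript
// Assumed subset of the task fields used elsewhere in this post.
type QueuedTask = { id: string; priority: number; timestamp: number };

// Hypothetical ordering: highest priority first, then oldest first,
// so that shift() always yields the task that should run next.
function insertByPriority(tasks: QueuedTask[], task: QueuedTask): QueuedTask[] {
  const next = [...tasks, task];
  next.sort((a, b) => b.priority - a.priority || a.timestamp - b.timestamp);
  return next;
}
```

For example, inserting two AI tasks with priority 50 and then a user task with priority 100 leaves the user task at the front of the queue, followed by the AI tasks in arrival order.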

Dynamic flow

When AI responds instantly, it feels robotic. Real humans type at varying speeds, get distracted, or react first before they speak, so I built in randomized delays to simulate that.

- Typing animation: When we first receive the response from the API, we show a "typing bubble" animation labeled with the name of the AI participant who sent that message. After a base delay of 4 seconds (for replies to a user message) or 7 seconds (for everything else), plus up to 2 seconds of random jitter, we display the message content.

- Message delay: Once the message is displayed, we have another short delay before the next task is processed. It's a small detail, but it makes the chat feel more human.

// start typing animation and set a random delay

const typingDelay = task.priority === 100 ? 4000 : 7000;

this.callbacks.onTypingStatusChange(task.conversation.id, data.sender);

await new Promise((resolve) => setTimeout(resolve, typingDelay + Math.random() * 2000));

// by the time we reach here, we show the actual message

const newMessage: Message = {

id: crypto.randomUUID(),

content: data.content,

sender: data.sender,

timestamp: new Date().toISOString(),

};

// notify the ui that a new message is available

this.callbacks.onMessageGenerated(task.conversation.id, newMessage);

// clear typing status

this.callbacks.onTypingStatusChange(null, null);

// insert a small pause before we queue the next message

await new Promise((resolve) => setTimeout(resolve, 2000));
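The delay calculation can be isolated into a small function so the ranges are easy to see and test. This is a sketch: typingDelayMs and sleep are hypothetical names, and the injectable random parameter is an addition for testability.

```typescript
// Base delay depends on priority: 4s for tasks triggered by a user message
// (priority 100), 7s otherwise. Up to 2s of random jitter is added on top.
function typingDelayMs(priority: number, random: () => number = Math.random): number {
  const base = priority === 100 ? 4000 : 7000;
  return base + random() * 2000;
}

// Promise-based pause, equivalent to the inline setTimeout calls above.
const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));
```

In the queue, the inline promise would then become await sleep(typingDelayMs(task.priority)). Replies to the user land in the 4 to 6 second range, and AI-to-AI replies in the 7 to 9 second range.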

User interruptions

Interruptions are normal in group chats. While an AI participant is "typing," you might jump in with a new question. In this system:

- Abort & re-queue: The moment a user message arrives, we abort any ongoing AI request to /api/chat and queue the user's message at the highest priority.

- Versioning: We use a version counter to prevent the queue from sending stale AI replies if the user has already changed the topic or added new messages. In other words, if a user jumps in multiple times and the conversation "version" changes, old AI tasks become irrelevant and are simply dropped.

- Batch multiple user messages: If you type multiple messages quickly, we wait a short time (500ms) to batch them before generating the AI response. This avoids disjointed or repeated AI answers.

public enqueueUserMessage(conversation: Conversation) {

const conversationState = this.getOrCreateConversationState(conversation.id);

// cancel all pending ai tasks for this conversation

this.cancelConversationTasks(conversation.id);

// clear any existing debounce timeout

if (conversationState.userMessageDebounceTimeout) {

clearTimeout(conversationState.userMessageDebounceTimeout);

conversationState.userMessageDebounceTimeout = null;

}

// store the user's messages to process after a short debounce

conversationState.pendingUserMessages = conversation;

// after 500ms, finalize the user’s messages as a single task

const timeoutId = setTimeout(() => {

if (conversationState.pendingUserMessages) {

conversationState.version++;

const task: MessageTask = {

id: crypto.randomUUID(),

conversation: conversationState.pendingUserMessages,

isFirstMessage: false,

priority: 100, // user messages have highest priority

timestamp: Date.now(),

abortController: new AbortController(),

consecutiveAiMessages: 0,

conversationVersion: conversationState.version,

};

conversationState.pendingUserMessages = null;

conversationState.userMessageDebounceTimeout = null;

this.addTask(conversation.id, task);

}

}, 500);

conversationState.userMessageDebounceTimeout = timeoutId;

}
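The method above relies on two pieces that aren't shown: cancelling pending tasks and dropping stale ones. Here's a minimal sketch of both, assuming simplified Task and State shapes. The cancelTasks and isStale functions are illustrative stand-ins, not the real cancelConversationTasks implementation.

```typescript
// Assumed subsets of the queue types; field names follow the snippets in this post.
type Task = { id: string; conversationVersion: number; abortController: AbortController };
type State = { version: number; currentTask: Task | null; tasks: Task[] };

// Hypothetical cancel step: abort the in-flight request (if any)
// and drop everything still waiting in the queue.
function cancelTasks(state: State): void {
  state.currentTask?.abortController.abort();
  for (const task of state.tasks) task.abortController.abort();
  state.tasks = [];
}

// A task is stale if the conversation version moved on after it was created.
function isStale(task: Task, state: State): boolean {
  return task.conversationVersion !== state.version;
}
```

The version check covers the case where an API response arrives after an abort was requested: before showing the message, the queue can compare versions and discard the reply if the user has spoken since.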

AI reactions

A true group chat needs more than just text. People "like" or "laugh" at messages all the time. I decided the AI participants should be able to do that too.

In the message queue, we determine whether to request a reaction by randomly generating a number between 0 and 1. If the number is less than 0.25, we pass a flag to the chat endpoint to request a reaction.

const response = await fetch("/api/chat", {

method: "POST",

headers: { "Content-Type": "application/json" },

body: JSON.stringify({

recipients: task.conversation.recipients,

messages: task.conversation.messages,

shouldWrapUp,

isFirstMessage: task.isFirstMessage,

isOneOnOne: task.conversation.recipients.length === 1,

shouldReact: Math.random() < 0.25,

}),

signal: task.abortController.signal,

});

Then in the chat endpoint, the prompt is modified to request a reaction:

prompt += `

${

shouldReact

? `- You must react to the last message

- If you love the last message, react with "heart"

- If you like the last message, react with "like"

- If the last message was funny, react with "laugh"

- If you strongly agree with the last message, react with "emphasize"`

: ""

}`;

Back in the message queue, we apply the reaction first and then pause for a few seconds before starting the typing animation. The reaction shows up before the message, which is how people tend to do it.

// if there's a reaction in the response, add it to the last message

if (data.reaction && task.conversation.messages.length > 0) {

const lastMessage = task.conversation.messages[task.conversation.messages.length - 1];

if (!lastMessage.reactions) {

lastMessage.reactions = [];

}

lastMessage.reactions.push({

type: data.reaction,

sender: data.sender,

timestamp: new Date().toISOString(),

});

// callback to update the ui

if (this.callbacks.onMessageUpdated) {

this.callbacks.onMessageUpdated(task.conversation.id, lastMessage.id, {

reactions: lastMessage.reactions,

});

}

// delay to show the reaction before the typing animation

await new Promise((resolve) => setTimeout(resolve, 3000));

}
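In the tool schema as shown earlier, reaction is always an optional field, so a model could return one even when shouldReact is false. A small guard can enforce the flag and filter out unknown values. This is a sketch: pickReaction is a hypothetical helper, and only the list of reaction types comes from the schema above.

```typescript
// Reaction types from the tool schema.
const REACTIONS = ["heart", "like", "dislike", "laugh", "emphasize"] as const;
type Reaction = (typeof REACTIONS)[number];

// Hypothetical guard: keep a reaction only if one was requested
// and the value is a known reaction type.
function pickReaction(value: unknown, shouldReact: boolean): Reaction | undefined {
  if (!shouldReact || typeof value !== "string") return undefined;
  return (REACTIONS as readonly string[]).includes(value) ? (value as Reaction) : undefined;
}
```

The queue would then check pickReaction(data.reaction, shouldReact) instead of data.reaction directly, which assumes the shouldReact value is kept in a local variable when the request is built.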

When to wrap up

One of the key features of a group chat is that the conversation can go on without the user. However, these models are not cheap (well, not the ones I used), so I couldn't have the AI participants going on forever.

To solve this, I set a limit on the number of messages without the user's participation. In the message queue, we keep track of the number of consecutive AI messages. When the next message would be the last one allowed, we pass a flag to the chat endpoint to tell it to wrap up.

In the message queue:

// don't add more ai messages if we've reached the limit

if (consecutiveAiMessages >= MAX_CONSECUTIVE_AI_MESSAGES) {

return;

}

// if the next message is the last one allowed, tell the chat endpoint to wrap up

const shouldWrapUp = task.consecutiveAiMessages === MAX_CONSECUTIVE_AI_MESSAGES - 1;

// pass that flag into the api call

const response = await fetch("/api/chat", {

method: "POST",

headers: { "Content-Type": "application/json" },

body: JSON.stringify({

recipients: task.conversation.recipients,

messages: task.conversation.messages,

shouldWrapUp,

// ...

}),

signal: task.abortController.signal,

});

Then, in the chat endpoint:

prompt += `

${

shouldWrapUp

? `

- This is the last message

- Don't ask a question to another recipient unless it's to "me" the user`

: ""

}`;

I wanted to make this transition feel natural, so I thought it would be fun to display the "Elon Musk has notifications silenced" message right after the final message is delivered.

if (shouldWrapUp) {

// send notifications silenced message

await new Promise((resolve) => setTimeout(resolve, 2000));

const silencedMessage: Message = {

id: crypto.randomUUID(),

content: `${data.sender} has notifications silenced`,

sender: "system",

type: "silenced",

timestamp: new Date().toISOString(),

};

this.callbacks.onMessageGenerated(task.conversation.id, silencedMessage);

}

This ensures you won't end up with an endless AI-on-AI conversation: the chat pauses until the user jumps back in to re-engage, and the ending is wrapped up nicely with a fun, iMessage-inspired touch.
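The two checks in this section can be combined into one pure function, which makes the off-by-one behavior explicit. This is a sketch: decideWrapUp is a hypothetical name, and the limit of 5 is the example value mentioned earlier rather than a confirmed constant.

```typescript
// Assumed value; the post mentions a limit "like 5" consecutive AI messages.
const MAX_CONSECUTIVE_AI_MESSAGES = 5;

type WrapUpDecision = { skip: boolean; shouldWrapUp: boolean };

// consecutiveAiMessages counts AI messages already sent since the user last spoke.
function decideWrapUp(
  consecutiveAiMessages: number,
  max: number = MAX_CONSECUTIVE_AI_MESSAGES,
): WrapUpDecision {
  return {
    // At the limit: don't generate another AI message at all.
    skip: consecutiveAiMessages >= max,
    // One below the limit: the next message is the last, so ask the model to wrap up.
    shouldWrapUp: consecutiveAiMessages === max - 1,
  };
}
```

With a limit of 5, the first four AI messages are generated normally, the fifth is generated with the wrap-up instructions, and any further AI task is skipped until the user sends a message and the counter resets to 0.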

Concurrent conversations

One big advantage of this message queue system is that you can have multiple conversations going at once—each with its own participants, message history, and tasks. For instance, you might have a conversation between "Elon Musk" and "Steve Jobs" happening at the same time as another conversation between "Fidji Simo" and "Frank Slootman."

Mapping conversation states

We store each conversation's state in a map, keyed by a unique conversationId:

// the global state of the message queue

private state: MessageQueueState = {

conversations: new Map(),

};

// represents the state of a specific conversation

type ConversationState = {

consecutiveAiMessages: number;

version: number;

status: "idle" | "processing";

currentTask: MessageTask | null;

tasks: MessageTask[];

userMessageDebounceTimeout: NodeJS.Timeout | null;

pendingUserMessages: Conversation | null;

lastActivity: number;

};

Each conversation has its own queue of tasks (tasks: MessageTask[]), along with a status that can be "idle" (waiting for new tasks) or "processing" (already working on one). The getOrCreateConversationState(conversationId: string) helper retrieves or initializes the state for a given ID:

private getOrCreateConversationState(conversationId: string): ConversationState {

let conversationState = this.state.conversations.get(conversationId);

if (!conversationState) {

conversationState = {

consecutiveAiMessages: 0,

version: 0,

status: "idle",

currentTask: null,

tasks: [],

userMessageDebounceTimeout: null,

pendingUserMessages: null,

lastActivity: Date.now(),

};

this.state.conversations.set(conversationId, conversationState);

} else {

// update last activity timestamp

conversationState.lastActivity = Date.now();

}

return conversationState;

}

Concurrent processing

The key to concurrency is that each conversation is processed independently. When you call something like enqueueAIMessage(conversation), you pass in the conversation info (including its ID), and the queue decides what to do next for that specific conversation. Meanwhile, other conversations can continue in parallel, each with its own queue of tasks.

private async processNextTask(conversationId: string) {

const conversationState = this.getOrCreateConversationState(conversationId);

// if this conversation is already busy or has no tasks, just return

if (conversationState.status === "processing" || conversationState.tasks.length === 0) {

return;

}

// set to processing

conversationState.status = "processing";

const task = conversationState.tasks.shift()!;

conversationState.currentTask = task;

try {

// ... handle api call, typing delay, etc.

} catch (error) {

// handle errors or aborts

} finally {

// reset status and current task

conversationState.status = "idle";

conversationState.currentTask = null;

// process next task in this *same* conversation

this.processNextTask(conversationId);

}

}

This includes two key points:

- No global lock: Each conversation can run processNextTask independently. If conversation A is busy, it doesn't block conversation B from sending or receiving AI messages.

- Parallel tasks: In a serverless environment (or any async runtime), multiple conversation tasks can be in-flight at once. Each conversation's tasks are queued within that conversation, but across conversations, the system operates concurrently.

Cleanups and conversation TTL

Finally, we handle cleanup to avoid unbounded growth in stale conversations. The queue has a timer (e.g., running every 30 minutes) that checks each conversation's lastActivity:

private cleanupOldConversations() {

const now = Date.now();

for (const [conversationId, state] of this.state.conversations.entries()) {

if (now - state.lastActivity > MessageQueue.CONVERSATION_TTL) {

this.cleanupConversation(conversationId);

}

}

}

Any conversation that hasn't been active for a certain TTL (e.g., 24 hours) is removed from the map, ensuring old or abandoned chats don't pile up forever.
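Here's a self-contained sketch of the sweep, using the example TTL from the text (24 hours) as an assumed value. The findExpired and sweep functions are hypothetical names, and the real cleanupConversation likely does more than delete the map entry, such as aborting in-flight tasks and clearing timeouts.

```typescript
// Assumed value, taken from the example in the text.
const CONVERSATION_TTL_MS = 24 * 60 * 60 * 1000;

// Only the field the sweep needs.
type Tracked = { lastActivity: number };

// Returns the IDs whose last activity is older than the TTL.
function findExpired(
  conversations: Map<string, Tracked>,
  now: number,
  ttl: number = CONVERSATION_TTL_MS,
): string[] {
  const expired: string[] = [];
  for (const [id, state] of conversations.entries()) {
    if (now - state.lastActivity > ttl) expired.push(id);
  }
  return expired;
}

// Hypothetical sweep: deletes expired entries and returns how many were removed.
function sweep(conversations: Map<string, Tracked>, now: number = Date.now()): number {
  const expired = findExpired(conversations, now);
  for (const id of expired) conversations.delete(id);
  return expired.length;
}
```

A timer such as setInterval(() => sweep(conversations), 30 * 60 * 1000) would run the sweep every 30 minutes. Collecting the expired IDs first keeps the deletion separate from the iteration, which also makes the expiry rule testable on its own.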

Challenges & reflections

Bringing AI personas to life in a group chat was a fun and fascinating challenge. It's been surprisingly entertaining to watch Elon Musk and Steve Jobs brainstorm together, or see Sam Altman drop in with a heart reaction.

I learned a ton through this project. In particular:

-

Realistic personas: One of the trickiest parts was making the AI replicas sound believable.

The added personality prompts made a big difference (shout out to

Spencer for this suggestion). I was surprised to find that the biggest

factor in making the AI personas sound real was actually model choice. I used the

Braintrust proxy to easily swap out different LLMs (

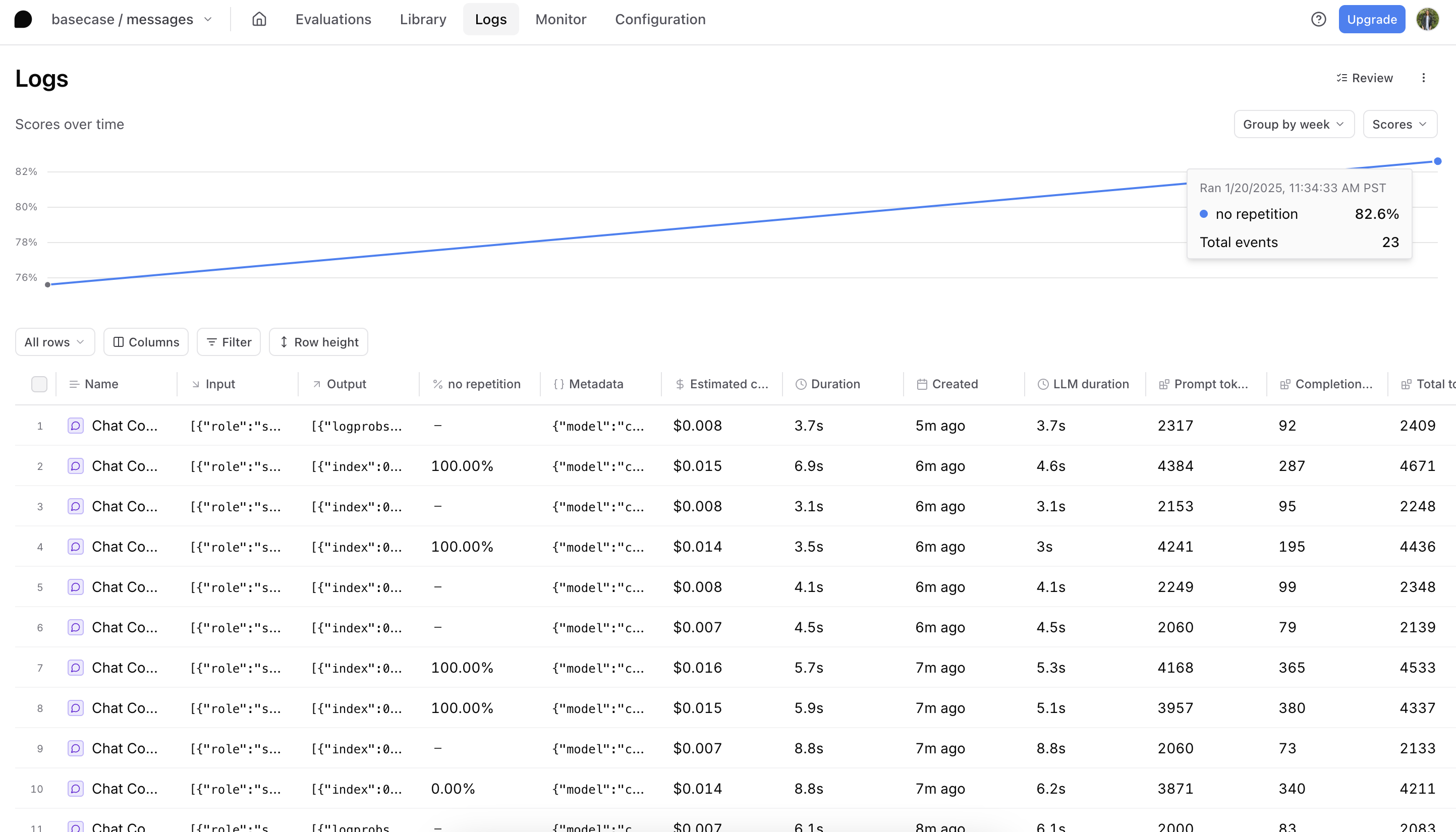

gpt-4o-mini,claude-3-5-sonnet-latest,grok-beta) and log everything for evals. Claude-3-5-sonnet-latest produced the most human-like interactions in my tests, so I settled on that.

-

Handling user interruptions: Coordinating multiple threads, user messages, AI concurrency, and reaction timing required careful engineering. In real life, group chats are chaotic, with overlapping messages and constant recontextualization. I ultimately designed a priority-based queue with conversation versioning to keep the experience smooth for users.

-

Concurrent conversations: I initially overlooked the idea of having multiple group chats active at once. In testing, I realized I wanted each conversation to continue even if I started a new one. With a few tweaks, I ended up with a design that allows any number of chats to run simultaneously, each with its own queue, tasks, and state.

A big thank you to Adrian, Ankur, Eden, JJ, Ornella, Riley, Sharif, & Spencer for their feedback and help tackling these challenges. I should also give a shout out to Windsurf for helping me write a lot of this code.

If you're curious about the nitty-gritty:

- /api/chat/route.ts: The API endpoint for composing prompts and fetching responses from the AI model.

- /lib/messages/message-queue.ts: The heart of the conversation flow. It manages priority-based tasks, timing delays, reaction logic, and more.

- /data/messages/initial-contacts.ts: Where I store each persona's style prompt, like Elon Musk, Steve Jobs, etc.

It's certainly not perfect, and I imagine there are countless ways to improve it. If you have questions or ideas for making it better, feel free to reach out on X.

Closing thoughts

Of course, building the backend was only half of the battle. Making it look and feel exactly like iMessage required its own kind of wizardry - little details like the background gradient, mentions, message tails, and sound effects took a lot of effort, but were well worth it to get just right. Perhaps that's a subject for another blog post.

Try it out for yourself and spin up your own conversations with people who inspire you. It's quite fun (and sometimes hilarious) to partake in the conversation or sit back and watch the chaos unfold!